Let’s assume you want to send your logs to Elasticsearch, so you can search or analyze them in real time. If your Elasticsearch cluster is in a remote location (EC2, for example), or if you use our log analytics service, Logsene (which exposes the Elasticsearch API), you might need to forward your data over an encrypted channel.

There’s more than one way to forward over SSL, and this post is part 1 of a series explaining how.

Update: part 2 is now available!

Today’s method is about sending data over HTTPS to Elasticsearch (or Logsene), instead of plain HTTP. You’ll need two pieces to achieve this:

- a tool that can send logs over HTTPS

- the Elasticsearch REST API exposed over HTTPS

You can build your own tool or use existing ones. In this post we’ll show you how to use rsyslog’s Elasticsearch output to do that. For the API, you can use Nginx or Apache as a reverse proxy for HTTPS in front of your Elasticsearch, or you can use Logsene’s HTTPS endpoint.
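If you do go the build-your-own route, the client side mostly comes down to an HTTPS POST against the Elasticsearch bulk API. Here is a minimal Python sketch of that idea, using only the standard library. The function names are made up for illustration, and the URL layout is an assumption: older Elasticsearch versions expect a type in the path (`/index/type/_bulk`), while recent ones only take the index.

```python
import http.client
import json
import ssl


def build_bulk_body(docs):
    """Serialize documents into the newline-delimited bulk format."""
    lines = []
    for doc in docs:
        # Empty action line: the index (and type, if any) come from the URL
        lines.append(json.dumps({"index": {}}))
        lines.append(json.dumps(doc))
    # Bulk bodies must end with a newline
    return "\n".join(lines) + "\n"


def send_bulk(host, index, docs, port=443, doc_type=None):
    """POST the documents over HTTPS and return (status, response body)."""
    # Assumption: include the type in the path only if your cluster needs it
    path = f"/{index}/{doc_type}/_bulk" if doc_type else f"/{index}/_bulk"
    conn = http.client.HTTPSConnection(
        host, port, context=ssl.create_default_context(), timeout=10
    )
    try:
        conn.request(
            "POST",
            path,
            body=build_bulk_body(docs).encode("utf-8"),
            headers={"Content-Type": "application/x-ndjson"},
        )
        resp = conn.getresponse()
        return resp.status, resp.read().decode("utf-8")
    finally:
        conn.close()
```

Calling `send_bulk("your-endpoint.example.com", "your-index-or-token", [{"message": "hello"}])` would send a single document. The default SSL context verifies the server certificate, which is what you want when the whole point is an encrypted channel. A real shipper would also need buffering and retries, which is exactly what rsyslog gives you out of the box.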

Rsyslog Configuration

To get rsyslog’s omelasticsearch plugin, you need at least version 6.6. HTTPS support was just added to master, and it’s expected to land in version 8.2.0. Once that version is released, you’ll be able to use the Ubuntu, Debian or RHEL/CentOS packages to install both the base rsyslog and the rsyslog-elasticsearch packages you need. Otherwise, you can always install from sources:

- clone from the rsyslog github repository

- run autogen.sh --enable-elasticsearch && make && make install (depending on your system, it might ask for some dependencies)

With omelasticsearch in place (the om part comes from output module, if you’re wondering about the weird name), you can try the configuration below to take all your logs from your local /dev/log and forward them to Elasticsearch/Logsene:

    # load needed input and output modules
    module(load="imuxsock.so")        # listen to /dev/log
    module(load="omelasticsearch.so") # provides Elasticsearch output capability

    # template that will build a JSON out of syslog
    # properties. Resulting JSON will be in Logstash format
    # so it plays nicely with Logsene and Kibana
    template(name="plain-syslog" type="list") {
        constant(value="{")
        constant(value="\"@timestamp\":\"")
        property(name="timereported" dateFormat="rfc3339")
        constant(value="\",\"host\":\"")
        property(name="hostname")
        constant(value="\",\"severity\":\"")
        property(name="syslogseverity-text")
        constant(value="\",\"facility\":\"")
        property(name="syslogfacility-text")
        constant(value="\",\"syslogtag\":\"")
        property(name="syslogtag" format="json")
        constant(value="\",\"message\":\"")
        property(name="msg" format="json")
        constant(value="\"}")
    }

    # send resulting JSON documents to Elasticsearch
    action(type="omelasticsearch"
           template="plain-syslog"
           # Elasticsearch index (or Logsene token)
           searchIndex="YOUR-LOGSENE-TOKEN-GOES-HERE"
           # bulk requests
           bulkmode="on"
           queue.dequeuebatchsize="100"
           # buffer and retry indefinitely if Elasticsearch is unreachable
           action.resumeretrycount="-1"
           # Elasticsearch/Logsene endpoint
           server="logsene-receiver.sematext.com"
           serverport="443"
           usehttps="on"
    )
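To get a feel for what the template produces, here is a rough Python imitation of it: it concatenates the same constants and properties in the same order. This is only a sketch for illustration. The helper names and sample values are made up, and `json.dumps` is used as a stand-in for rsyslog’s `format="json"` escaping, which may differ in details such as how non-ASCII characters are written.

```python
import json


def json_escape(value):
    """Escape a string so it can sit between JSON quotes (stand-in for format="json")."""
    return json.dumps(value)[1:-1]


def plain_syslog_json(timereported, hostname, severity, facility, syslogtag, msg):
    """Build one document the way the plain-syslog template does."""
    return (
        '{"@timestamp":"' + timereported
        + '","host":"' + hostname
        + '","severity":"' + severity
        + '","facility":"' + facility
        + '","syslogtag":"' + json_escape(syslogtag)
        + '","message":"' + json_escape(msg)
        + '"}'
    )


# Hypothetical sample values, not taken from a real log
doc = plain_syslog_json(
    "2014-03-10T12:34:56+01:00",
    "web01",
    "info",
    "daemon",
    "sshd[1234]:",
    ' Accepted "publickey" for root',
)
print(doc)
```

Note that only the tag and the message are escaped, just like in the template. Those are the two fields most likely to contain quotes or control characters, and without escaping a single stray quote would produce invalid JSON that Elasticsearch rejects.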

Exploring Your Data

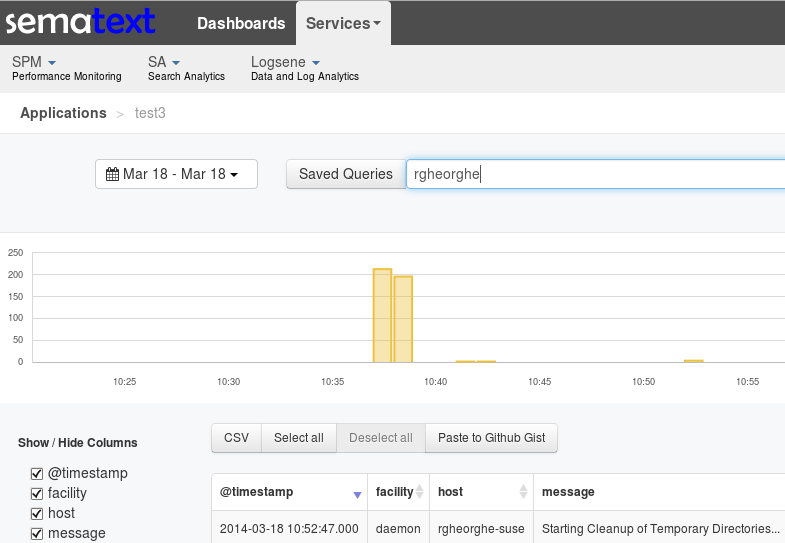

After restarting rsyslog, you should be able to see your logs flowing in the Logsene UI, where you can search and graph them:

If you prefer Logsene’s Kibana UI, or you run your own Elasticsearch cluster, you can make your own Kibana connect to the HTTPS endpoint, just like rsyslog or Logsene’s native UI do.
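Under the hood, any UI talking to that endpoint is just issuing search requests over HTTPS. If you want to check your data without a UI, something like the Python sketch below could work. It assumes the endpoint accepts the standard Elasticsearch `_search` call with a `query_string` query, and that documents carry the `@timestamp` field from the template above; the function names are made up for illustration.

```python
import http.client
import json
import ssl


def build_search(index, text, size=10):
    """Return the path and JSON body for a newest-first text search."""
    path = f"/{index}/_search"
    body = {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {"query_string": {"query": text}},
    }
    return path, json.dumps(body)


def search(host, index, text, port=443, size=10):
    """Run the search over HTTPS and return the matching documents."""
    path, body = build_search(index, text, size)
    conn = http.client.HTTPSConnection(
        host, port, context=ssl.create_default_context(), timeout=10
    )
    try:
        conn.request(
            "POST",
            path,
            body=body.encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        resp = conn.getresponse()
        payload = json.loads(resp.read().decode("utf-8"))
    finally:
        conn.close()
    # Each hit keeps the original document under _source
    return [hit["_source"] for hit in payload.get("hits", {}).get("hits", [])]
```

For example, `search("your-endpoint.example.com", "your-index-or-token", "severity:err")` would fetch the ten most recent error-level messages, assuming the field names from the template.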

Wrapping Up

If you’re using Logsene, all you need to do is to make sure you add your Logsene application token as the Elasticsearch index name in rsyslog’s configuration.

If you’re running your own Elasticsearch cluster, there are some nice tutorials about setting up reverse HTTPS proxies with Nginx and Apache respectively. You can also try Elasticsearch plugins that support HTTPS, such as the jetty and security plugins.

Feel free to contact us if you need any help. We’d be happy to answer any Logsene questions you may have, as well as help you with your local setup through professional services and production support. If you just find this stuff exciting, you may want to join us, wherever you are.

Stay tuned for part 2, which will show you how to use RFC 5425 TLS syslog to encrypt your messages from one syslog daemon to the other.