Just the other day we wrote about Sensei, the new distributed, real-time full-text search database built on top of Lucene and here we are again writing about another “new” distributed, real-time, full-text search server also built on top of Lucene: SolrCloud.

In this post we’ll share some interesting SolrCloud bits and pieces that matter mostly to those working with large data and query volumes, but that all search lovers should find interesting, too. If you have any questions about what we wrote (or did not write!) in this post, please leave a comment – we’re good at following up on comments! Or just ask @sematext!

Please note that the functionality described in this post is now part of trunk in the Lucene and Solr SVN repository. This means that it will be available when Lucene and Solr 4.0 are released, but you can also use the trunk version just like we did, if you don’t mind living on the bleeding edge.

Recently, we were given the opportunity to once again use big data (massive may actually be more descriptive of this data volume) stored in an HBase cluster and search it. We needed to design a scalable search cluster capable of elastically handling future data volume growth. Because of the huge data volume and high search rates, our search system required the index to be sharded. We also wanted indexing to be as simple as possible, and we wanted a stable, reliable, and very fast solution. The one thing we did not want to do was reinvent the wheel. At this point you may ask why we didn’t choose ElasticSearch, especially since we use ElasticSearch a lot at Sematext. The answer is that we started the engagement with this particular client a whiiiiile back, when ElasticSearch wasn’t where it is today. And while ElasticSearch does have a number of advantages over the old master-slave Solr, with SolrCloud being in the trunk now, Solr is again a valid choice for very large search clusters.

And so we took the opportunity to use SolrCloud and some of its features not present in previous versions of Solr. In particular, we wanted to make use of Distributed Indexing and Distributed Searching, both of which SolrCloud makes possible. In the process we looked at a few JIRA issues, such as SOLR-2358 and SOLR-2355, and we got familiar with relevant portions of SolrCloud source code. This confirmed SolrCloud would indeed satisfy our needs for the project and here we are sharing what we’ve learned.

Our Search Cluster Architecture

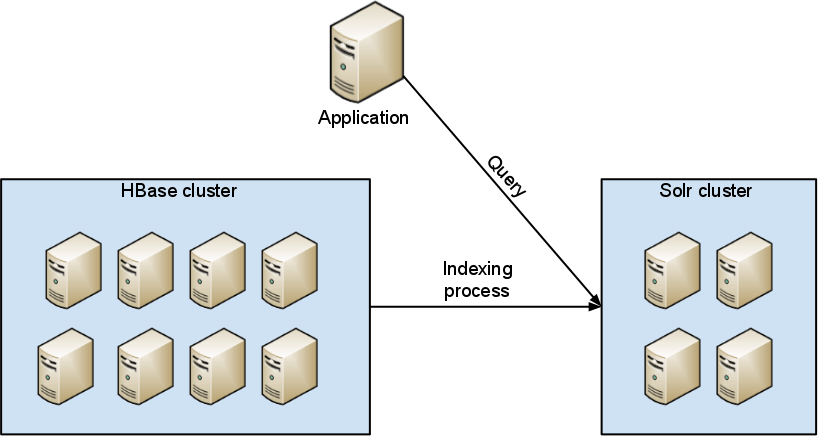

Basically, we wanted the search cluster to look like this:

Simple? Yes, we like simple. Who doesn’t! But let’s peek inside that “Solr cluster” box now.

SolrCloud Features and Architecture

Some of the nice things about SolrCloud are:

- centralized cluster configuration

- automatic node fail-over

- near real time search

- leader election

- durable writes

- …

Furthermore, SolrCloud can be configured to:

- have multiple index shards

- have one or more replicas of each shard

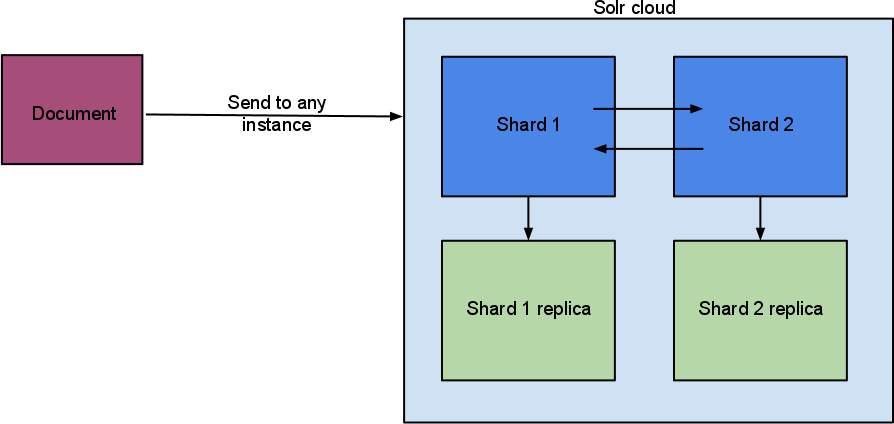

Shards and Replicas are arranged into Collections. Multiple Collections can be deployed in a single SolrCloud cluster. A single search request can search multiple Collections at once, as long as they are compatible. The diagram below shows a high-level picture of how SolrCloud indexing works.

As the above diagram shows, documents can be sent to any SolrCloud node/instance in the SolrCloud cluster. Documents are automatically forwarded to the appropriate Shard Leader (labeled as Shard 1 and Shard 2 in the diagram), and they are sent between Shards in batches. If a Shard has one or more replicas (labeled Shard 1 replica and Shard 2 replica in the diagram), a document will get replicated to one or more replicas. Unlike in traditional master-slave Solr setups, where index/shard replication is performed periodically in batches, replication in SolrCloud is done in real-time. This is how Distributed Indexing works at a high level. We simplified things a bit, of course – for example, there is no ZooKeeper or overseer shown in our diagram.
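To make the flow above a bit more concrete, here is a toy Python sketch of the idea: any node accepts documents, groups them by target shard, forwards each group to that shard's leader, and the leader copies each document to its replicas right away. This is not SolrCloud code. The class names are made up for illustration, and using CRC32 modulo the shard count is purely our assumption standing in for whatever hashing SolrCloud really uses.

```python
import zlib
from collections import defaultdict


class ShardSketch:
    """Toy model of one shard: a leader plus zero or more replicas (hypothetical)."""

    def __init__(self, name, replica_count=1):
        self.name = name
        self.leader = {}  # doc id -> document
        self.replicas = [{} for _ in range(replica_count)]

    def index(self, doc):
        # The leader stores the document and immediately copies it to every replica.
        self.leader[doc["id"]] = doc
        for replica in self.replicas:
            replica[doc["id"]] = doc


class ClusterSketch:
    """Toy model of a cluster that routes documents by hashing their id (hypothetical)."""

    def __init__(self, num_shards, replicas_per_shard=1):
        self.shards = [
            ShardSketch(f"shard{i + 1}", replicas_per_shard) for i in range(num_shards)
        ]

    def shard_for(self, doc_id):
        # Assumption: CRC32 modulo the shard count stands in for the real hash function.
        return self.shards[zlib.crc32(doc_id.encode("utf-8")) % len(self.shards)]

    def add_documents(self, docs):
        # Group incoming documents per target shard, then forward each group as a batch.
        batches = defaultdict(list)
        for doc in docs:
            batches[self.shard_for(doc["id"]).name].append(doc)
        for shard in self.shards:
            for doc in batches.get(shard.name, []):
                shard.index(doc)
        return {name: len(batch) for name, batch in batches.items()}


cluster = ClusterSketch(num_shards=2, replicas_per_shard=1)
print(cluster.add_documents([{"id": f"doc{i}"} for i in range(20)]))
```

The point to notice is that the caller never picks a shard; the routing decision depends only on the document id, so the same document always lands on the same shard.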

Setup Details

All configuration files are stored in ZooKeeper. If you are not familiar with ZooKeeper, you can think of it as a distributed file system where SolrCloud configuration files are stored. When the first Solr instance in a SolrCloud cluster is started, configuration files need to be sent to ZooKeeper and one needs to specify how many shards there should be in the cluster. Then, once this Solr instance/node is running, one can start additional Solr instances/nodes and point them to the ZooKeeper instance (ZooKeeper is actually typically deployed as a quorum of 3, 5, or more instances in production environments). And voilà – the SolrCloud cluster is up! We must say, it’s quite simple and straightforward.
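As a rough illustration of those two steps, the Python sketch below only assembles the command lines; it does not start anything. The system properties (`bootstrap_confdir`, `collection.configName`, `zkRun`, `numShards`, `zkHost`, `jetty.port`) are the ones we remember from the SolrCloud wiki examples of this period, so treat them as an assumption and check them against your own trunk checkout. The paths, the config name, and the ports are placeholder values.

```python
def first_node_command(conf_dir, config_name, num_shards):
    """Command line for the first node: uploads config to ZooKeeper and sets the shard count."""
    return [
        "java",
        f"-Dbootstrap_confdir={conf_dir}",          # local config directory to upload
        f"-Dcollection.configName={config_name}",   # name the config gets in ZooKeeper
        "-DzkRun",                                  # run an embedded ZooKeeper with this node
        f"-DnumShards={num_shards}",                # how many shards the cluster should have
        "-jar",
        "start.jar",
    ]


def additional_node_command(zk_host, jetty_port):
    """Command line for every later node: only needs to know where ZooKeeper lives."""
    return [
        "java",
        f"-Djetty.port={jetty_port}",
        f"-DzkHost={zk_host}",
        "-jar",
        "start.jar",
    ]


# Placeholder values for illustration only.
print(" ".join(first_node_command("./solr/conf", "myconf", 2)))
print(" ".join(additional_node_command("localhost:9983", 7574)))
```

The embedded ZooKeeper is convenient for trying things out; with an external ZooKeeper quorum, the first node would presumably be pointed at it with `zkHost` instead of using `zkRun`.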

Shard Replicas in SolrCloud serve multiple purposes. They provide fault tolerance in the sense that when (not if!) a single Solr instance/node containing a portion of the index goes down, you still have one or more replicas of the data that was served by that instance elsewhere in the cluster, and thus you still have the whole data set and no data loss. They also allow you to spread query load over more servers, thus making the cluster capable of handling higher query rates.
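Both purposes can be shown with one small sketch: rotate queries over all copies of a shard, and skip any copy that is known to be down. This is a simplified Python illustration of the idea, not how SolrCloud implements it; the `ReplicaPicker` class and the node names are hypothetical.

```python
import itertools


class ReplicaPicker:
    """Round-robin over all copies of one shard, skipping nodes marked as down."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.down = set()
        self._cycle = itertools.cycle(self.nodes)

    def mark_down(self, node):
        self.down.add(node)

    def mark_up(self, node):
        self.down.discard(node)

    def next_node(self):
        # Try each node at most once per call; fail only if every copy is down.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node not in self.down:
                return node
        raise RuntimeError("no live copy of this shard is available")


picker = ReplicaPicker(["shard1-leader", "shard1-replica"])
print([picker.next_node() for _ in range(4)])  # load alternates between both copies
picker.mark_down("shard1-leader")
print(picker.next_node())                      # queries keep working via the replica
```

With two live copies the query load is split between them; with one copy down, every query goes to the survivor and the shard's data stays searchable.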

Indexing

As you saw above, the new SolrCloud really simplifies Distributed Indexing. Document distribution between Shards and Replicas is automatic and real-time. There is no master server one needs to send all documents to. A document can be sent to any SolrCloud instance and SolrCloud takes care of the rest. Because of this, there is no longer a SPOF (Single Point of Failure) in Solr. Previously, Solr master was a SPOF in all but the most elaborate setups.

Querying

One can query SolrCloud a few different ways:

- One can query a single Shard, which is just like querying a single Solr instance.

- The second option is to query a single Collection (i.e., search all shards holding pieces of a given Collection’s index).

- The third option is to only query some of the Shards by specifying their addresses or names.

- Finally, one can query multiple Collections assuming they are compatible and Solr can merge results they return.

As you can see, lots of choices!
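For the curious, here is roughly what those four kinds of requests can look like as URLs. The Python below only builds the URLs and sends nothing. The `distrib`, `shards`, and `collection` request parameters are the ones we know from the SolrCloud wiki, so please treat the exact names as an assumption for your version; the hosts, ports, and collection names are placeholders.

```python
from urllib.parse import urlencode


def select_url(base_url, query, **extra):
    """Build a /select URL with the query plus any extra request parameters."""
    params = {"q": query}
    params.update(extra)
    return f"{base_url}/select?{urlencode(params)}"


node = "http://localhost:8983/solr"  # placeholder node address

# 1. A single Shard: ask this node not to distribute the request.
single_shard = select_url(node, "*:*", distrib="false")

# 2. A whole Collection: all of its shards are searched.
whole_collection = select_url(f"{node}/collection1", "*:*")

# 3. Only some Shards, listed by address.
some_shards = select_url(
    f"{node}/collection1", "*:*", shards="localhost:8983/solr,localhost:7574/solr"
)

# 4. Several compatible Collections at once.
multi_collection = select_url(
    f"{node}/collection1", "*:*", collection="collection1,collection2"
)

for url in (single_shard, whole_collection, some_shards, multi_collection):
    print(url)
```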

Administration with Core Admin

In addition to the standard core admin parameters there are some new ones available in SolrCloud. These new parameters let one:

- create new Shards for an existing Collection

- create a new Collection

- add more nodes

- …
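As an example, the sketch below builds a Core Admin `CREATE` request that names a Collection and, optionally, a Shard. As far as we know, `collection` and `shard` are among the new parameters, but the exact parameter set in your trunk version is an assumption to verify. The core, collection, and shard names are made up, and the Python only builds the URL without calling Solr.

```python
from urllib.parse import urlencode


def create_core_url(base_url, core_name, collection, shard=None):
    """Build a Core Admin CREATE URL that places the new core in a Collection (and Shard)."""
    params = {"action": "CREATE", "name": core_name, "collection": collection}
    if shard is not None:
        params["shard"] = shard  # omit to leave shard assignment to the cluster
    return f"{base_url}/admin/cores?{urlencode(params)}"


# Placeholder names for illustration only.
print(create_core_url("http://localhost:8983/solr", "mycore", "collection1", "shard2"))
print(create_core_url("http://localhost:8983/solr", "othercore", "collection2"))
```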

The Future

If you look at the New SolrCloud Design wiki page (https://wiki.apache.org/solr/NewSolrCloudDesign) you will notice that not all planned features have been implemented yet. There are still things like cluster re-balancing or monitoring (if you are using SolrCloud already and want to monitor its performance, let us know if you want early access to SPM for SolrCloud) to be done. Now that SolrCloud is in the Solr trunk, it should see more attention from both users and developers. We look forward to using SolrCloud in more projects in the future!